Object-based audio systems such as MPEG-H Audio are ideal for use in sports broadcasting, particularly in soccer. One reason for this is that top-class sport has always been a driver of innovation on and off the pitch. More important, however, is the fact that this sport in particular has a range of different acoustic elements that are ideally suited for use as customizable audio objects.

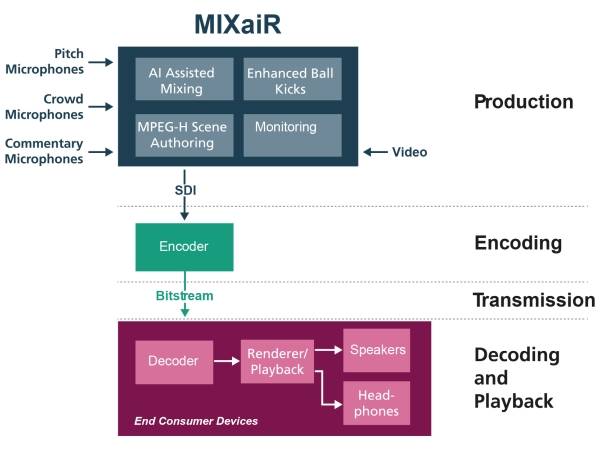

A classic transmission chain with MPEG-H audio support

In Brazil, the MPEG-H standard was selected in December 2021 as the only mandatory audio system for TV 3.0, the new generation of terrestrial broadcasting. This followed its inclusion in TV 2.5 (an intermediate stage between digital TV 2.0 and Next Generation TV 3.0) in 2019. MPEG-H Audio has been on air in regular 24/7 broadcasting via ISDB-T since 2021. The media giant Globo used the 2022 FIFA World Cup to test MPEG-H Audio across the board for both TV 2.5 and TV 3.0. A UHD HDR video signal was transmitted from Qatar to Brazil together with 16 audio channels, including a 5.1+4H bed, commentary and other effects. In Brazil the signals were then processed, combining leading TV 2.5 and TV 3.0 technologies with MPEG-H Audio and using them in regular operation.

AI-supported tools make it easier to work with complex microphone setups.

At Globo in Rio de Janeiro, the audio was enriched live with interactivity and personalization options using the Linear Acoustic Authoring and Monitoring System (AMS). This part of the production chain, known as "authoring", is specific to Next Generation Audio: it defines the properties of the individual audio objects via metadata. In the case of MPEG-H Audio, all metadata is carried in a PCM audio track that is combined with video and the other audio content with frame accuracy. The resulting personalization options are a distinguishing feature of the object-based audio system. For example, viewers can switch from the home team's commentator to the visiting team's commentator, adjust the commentators' volume, or bring the sound of the fan stands into their living room. Various settings can be combined into presets and offered for selection via a user-friendly menu on the end device.
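To make the idea of authored objects and presets more concrete, here is a minimal Python sketch. It is not the MPEG-H metadata syntax or the AMS data model; the classes `AudioObject`, `Preset` and `Scene`, the notion of a `switch_group` for mutually exclusive commentators, and all object names are assumptions introduced purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AudioObject:
    """One authored audio element (hypothetical model, not MPEG-H syntax)."""
    name: str
    # Objects sharing a switch group are alternatives; only one plays at a time.
    switch_group: Optional[str] = None


@dataclass
class Preset:
    """A named combination of objects offered in the device menu."""
    label: str
    active_objects: list


@dataclass
class Scene:
    objects: dict
    presets: list


def resolve(scene, preset_label, choices=None):
    """Return the object names that play for a preset and the viewer's choices."""
    choices = choices or {}
    preset = next(p for p in scene.presets if p.label == preset_label)
    playing = []
    for name in preset.active_objects:
        group = scene.objects[name].switch_group
        # Inside a switch group the viewer's choice replaces the authored default.
        chosen = choices.get(group, name) if group else name
        if scene.objects[chosen].switch_group != group:
            raise ValueError(f"{chosen!r} is not in switch group {group!r}")
        playing.append(chosen)
    return playing


# Illustrative scene: a channel bed, the crowd, and two alternative commentators.
scene = Scene(
    objects={o.name: o for o in [
        AudioObject("bed"),
        AudioObject("crowd"),
        AudioObject("commentary_home", switch_group="commentary"),
        AudioObject("commentary_away", switch_group="commentary"),
    ]},
    presets=[
        Preset("Default", ["bed", "crowd", "commentary_home"]),
        Preset("Stadium", ["bed", "crowd"]),
    ],
)

print(resolve(scene, "Default", {"commentary": "commentary_away"}))
```

In this sketch the audio itself is never touched: the viewer's selection only changes which authored objects are rendered, which mirrors the role that metadata plays in the text above.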

Naturally, the type and number of audio signals change significantly during the half-time break, as the live broadcast is interrupted by advertising or other content. Thanks to the robust metadata structure of MPEG-H Audio, all metadata can be changed abruptly during live operation if required. The interaction options presented to the audience change accordingly, so that only the content actually available at that moment is offered.

The final step on the production side is to combine the audio and video signals and encode them in a single step. The TITAN Live Encoder from Ateme was used in Brazil for real-time encoding and streaming via DASH.

With flexible user interfaces, viewers can customize the sound of the football broadcast to suit their preferences.

One of the Globo studios in Rio de Janeiro was equipped with special transmission technology for the presentation of the new sound options. Among other things, an LG television was used to receive the live stream. Viewers were able to adjust the sound to their preferences via an MPEG-H Audio user interface. The available options are defined by the metadata created during authoring, which specifies the selections and adjustments on offer. In this case, it was possible to choose between English and Portuguese commentary, increase or decrease the sound of the ball kicks and change the volume of the fan chants.
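The relationship between authored limits and viewer adjustments can be sketched as follows. This is an illustration only: the `GainRange` type, the function `apply_user_gain` and the example limits of -6 dB to +6 dB are assumptions, not values or interfaces taken from the Globo test or from an MPEG-H decoder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GainRange:
    """Adjustment limits for one object, as assumed to be set during authoring."""
    min_db: float
    max_db: float


def apply_user_gain(requested_db: float, allowed: GainRange) -> float:
    """Clamp the viewer's request to the authored range and return a linear factor."""
    clamped_db = max(allowed.min_db, min(allowed.max_db, requested_db))
    # Convert decibels to a linear amplitude factor.
    return 10 ** (clamped_db / 20)


# Hypothetical limits for the ball-kick object.
ball_kicks = GainRange(min_db=-6.0, max_db=6.0)

# A request of +20 dB is limited to the authored maximum of +6 dB.
print(apply_user_gain(20.0, ball_kicks))
```

Clamping on the playback side means the viewer can only move within what the sound engineer has approved, so a personalized mix cannot drift far from the intended balance.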

In Rio de Janeiro, the immersive sound of MPEG-H Audio could be experienced alongside the interactivity. Using HDMI passthrough, the MPEG-H bitstream is forwarded from the TV to MPEG-H Audio-capable AVRs and soundbars, where the signal is decoded, rendered and played back. During the test in Rio, Sennheiser AMBEO soundbars were connected in this way.

An app with many options in South Korea

The same World Cup on a different continent: SBS, one of South Korea's leading TV broadcasters, also took the opportunity to test a world first. MPEG-H Audio has been on air there on all channels since 2017 under the ATSC 3.0 standard. For the World Cup, SBS developed a dedicated live streaming app that became the first regular MPEG-H Audio streaming service with personalizable sound. The app let viewers access the World Cup stream and adjust the sound to their own preferences.

As in Brazil, the mix in South Korea was based on the 16-channel signal from Qatar. This time, however, only presets were created during authoring. Presets are the simplest type of interaction option: they offer viewers a set of different mixes that can easily be switched between. A common combination is a "standard" preset containing the original mix and an "enhanced dialog" preset that improves speech intelligibility by lowering the background when speech is active.
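The principle behind an "enhanced dialog" mix, lowering the background while speech is active, can be illustrated with a deliberately crude sketch. The function `enhanced_dialog_mix`, the amplitude threshold and the -9 dB attenuation are assumptions chosen for the example; this is not how SBS or an MPEG-H decoder implements the preset, and a production system would smooth the gain over time rather than switch it per sample.

```python
def enhanced_dialog_mix(dialog, background, threshold=0.05, duck_db=-9.0):
    """Mix two equally long sample lists, lowering the background under speech.

    Speech activity is approximated per sample by comparing the dialog
    amplitude with a fixed threshold (a simplification for illustration).
    """
    duck = 10 ** (duck_db / 20)  # attenuation as a linear factor
    mixed = []
    for d, b in zip(dialog, background):
        background_gain = duck if abs(d) > threshold else 1.0
        mixed.append(d + background_gain * b)
    return mixed


# First sample: no speech, background passes unchanged.
# Second sample: speech present, background is attenuated by 9 dB.
print(enhanced_dialog_mix([0.0, 0.5], [0.2, 0.2]))
```

Because dialog and background are kept as separate objects until playback, this kind of rebalancing is possible at all; in a conventional channel-based mix the two are already summed.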

In the SBS app, the audience could choose between the presets "Basic", "Enhanced Dialog", "Site" and "Dialog Only". Such a high degree of personalization was a first for live streams of sporting events. The "Basic" preset was created for viewers who prefer the usual audio playback, "Enhanced Dialog" made the commentators more prominent, and "Site" reproduced the pure stadium atmosphere without commentary.

And artificial intelligence can do it too: MPEG-H Audio in the Premier League

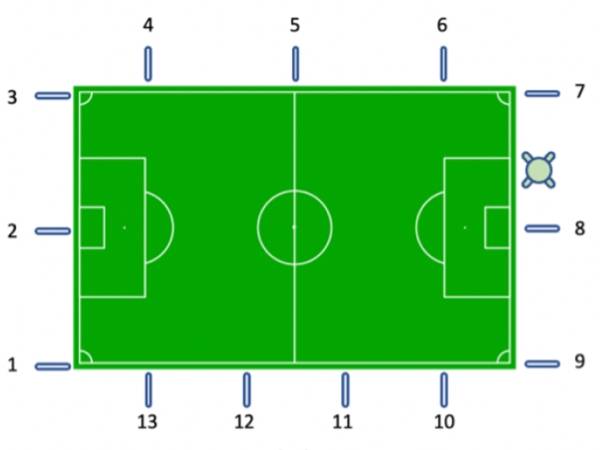

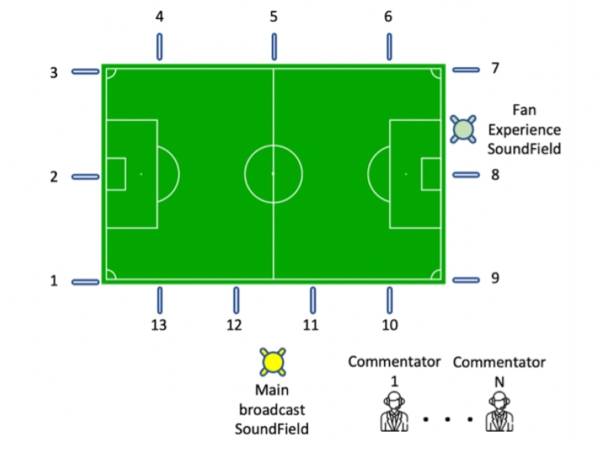

We have seen above that MPEG-H audio provides a more intense experience in soccer broadcasts. But long before audio objects are configured at the broadcasting station, a lot of work has already been done, directly on the pitch, so to speak. During a soccer match, a large number of microphones are used to capture the game in as much detail as possible. All these signals have to be mixed and controlled. In such a demanding live production environment, efficient and above all robust processes are crucial. Elite sport is one of the key drivers of technology development, where immersive sound and personalization have become an integral part of broadcasting and a coveted competitive advantage. Both require object-based approaches, which is why special tools for these new technologies need to be integrated into established processes - if possible without additional work for sound engineers. And this is where artificial intelligence comes into play: AI-based mixing software can significantly reduce the workload of sound engineers, freeing up time for other tasks. For example, machine learning can be used to manage a large number of microphone signals.

MIXaiR workflow flowchart. From the sidelines to the TV: next-generation audio technologies make state-of-the-art TV experiences possible.

The British company Salsa Sound uses this approach for Premier League matches. Its "MIXaiR" tool automatically combines incoming audio signals into stems. The sound engineer can control and monitor this process while the AI takes over much of the workload. For example, the AI-supported "pitch assist" function mixes the pitch microphones and also takes on the attention-intensive task of following the ball across the pitch. This allows the sound engineer to spend the time freed up on additional authoring tasks without compromising the quality of the mix.

The increasing use of next-generation audio systems such as MPEG-H Audio shows that the combination of AI tools, metadata authoring, and modern broadcasting options enables optimal adaptation to the consumption habits of today's audiences. New approaches are being developed and tested at an international level, particularly in sports broadcasting.

Beyond sport as a driver of innovation, object-based, AI-supported audio systems are gradually coming into widespread use. In the online media libraries of German broadcasters, for example, they provide a remedy for dialog that is difficult to understand. With the AI-supported technology MPEG-H Dialog+, an alternative version with lowered background sounds and music can be created from existing audio mixes. Audio objects can also be used here to provide personalization options such as adjustable dialog volume.

Aimée Moulson

Aimée Moulson graduated from the University of Huddersfield with a degree in Music Technology and Audio Systems. After working as an engineer in the broadcast industry, Aimée received her M.Sc in Broadcast Engineering from Birmingham City University. Since 2021 she has worked at Fraunhofer IIS as a Sound Engineer, producing immersive and object-based content for the MPEG-H Audio ecosystem. She specializes in immersive audio for sports productions and systems integration for Next Generation Audio workflows.

Article translations are machine translated and proofread.
