
site-unspecific is a four-track album of electronic music originally composed in ambisonic format. It is an outcome of the artistic research project “Embodied/Encoded: A Study of Presence in Digital Music,” funded by the Norwegian Academy of Music,1 with additional resources connected to Natasha Barrett’s “Reconfiguring the Landscape” project.2 “Embodied/Encoded” explores presence from the conceptual vantage points of extended reality and embodied music cognition, through practices of recording, creative coding, spatial audio production, and electroacoustic music composition.

The sites and spaces evoked in the compositions are not specific, but carry the sonic ephemera that characterize real-world acoustic experience. Scenes are filtered and fragmented in the way that present moments are reimagined as we encounter them, expanding into vast spaces of mind and body.

This article discusses technical aspects of the site-unspecific composition Still Life, from its production in spatial audio settings to its eventual reduction to stereo format. Some practical issues around ambisonics are examined, particularly the transition between different studio and concert setups and the devising of decoding schemes for each. The article also reflects on bespoke synthesis routines that produce spatially distributed sound events, and on how these behaved in ambisonic space when encoded as virtual sources.

FluCoMa in SuperCollider

How can the ephemeral character of real-world acoustic sound be creatively inflected/affected by digital structures? How might the oscillation between acoustic and digital archetypes inform a procedural and poetic approach to composition? This is the line of questioning that informed the creative methodology of site-unspecific, exploring the reconfiguration of real-world presence through digital techniques.

The album is autobiographical, made predominantly from field recordings of sites in Oslo, Norway during my time as a Research Fellow. It conveys nomadic metaphors and feelings of solitude which I experienced as an immigrant after moving at the height of the COVID-19 pandemic in 2020. Conceptual and technical aspects of the works are well-documented in the Research Catalogue.3 Here, I offer a deeper analysis of sound design and production techniques devised for the work Still Life, composed in 2021.

Early in the Fellowship period, I began working with the Fluid Corpus Manipulation (FluCoMa) suite of externals for SuperCollider and Max. FluCoMa gave me accurate and flexible access to various perceptual dimensions of recorded sounds.4 FluCoMa includes several audio slicers, which detect different types of onset characteristics in an audio stream and output the resulting data for use in other processes. Slicers can be configured to isolate perceptually salient sound events according to various analysis criteria.
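FluCoMa's slicers run inside SuperCollider and Max, and their internals are not described in this article. As a rough, language-neutral illustration of what an onset slicer does, the Python sketch below (an assumption, not FluCoMa's algorithm) computes half-wave-rectified spectral flux between overlapping FFT frames and keeps local peaks above a relative threshold. The function name, frame size, hop, threshold, and test signal are all illustrative choices.

```python
import numpy as np


def spectral_flux_onsets(x, sr, n_fft=1024, hop=512, threshold=0.1):
    """Return onset times in seconds (frame start times) detected by spectral flux.

    A generic sketch of an onset slicer; it is not FluCoMa's algorithm.
    """
    x = np.asarray(x, dtype=float)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # Magnitude spectrum of each windowed frame.
    mags = np.array([
        np.abs(np.fft.rfft(window * x[i * hop:i * hop + n_fft]))
        for i in range(n_frames)
    ])
    # Sum of positive magnitude changes between consecutive frames.
    rise = np.maximum(mags[1:] - mags[:-1], 0.0).sum(axis=1)
    flux = np.concatenate([[0.0], rise])
    peak = flux.max()
    if peak > 0:
        flux = flux / peak  # normalise so the threshold is relative
    onsets = []
    for i in range(1, n_frames - 1):
        # Keep local maxima that exceed the relative threshold.
        if flux[i] > threshold and flux[i] >= flux[i - 1] and flux[i] > flux[i + 1]:
            onsets.append(i * hop / sr)
    return onsets


# Two decaying 440 Hz bursts starting at 0.5 s and 1.5 s in otherwise silent audio.
sr = 8000
t = np.arange(2 * sr) / sr
signal = np.zeros(2 * sr)
for start in (4000, 12000):
    decay = np.linspace(1.0, 0.0, 2000)  # abrupt attack, gradual release
    signal[start:start + 2000] = decay * np.sin(2 * np.pi * 440 * t[start:start + 2000])
onsets = spectral_flux_onsets(signal, sr)
print(onsets)
```

Each reported time could serve as a slice boundary for the re-ordering and granular processes discussed in this article. Detectors tuned to other criteria (amplitude envelopes, novelty curves) follow the same pattern: compute a feature curve, then pick its peaks.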

All works on site-unspecific implement onset analysis and slicing in some way, particularly in the form of algorithmic re-orderings of slices coded in SuperCollider. Re-orderings can be procedural or based on perceptual criteria such as pitch, loudness, or duration (for example, ordering slices by their average pitch, from low to high). With this method, I could address long spans of recorded material and audition generative musical processes driven by different analysis methods.
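The SuperCollider code for these re-orderings is not reproduced in the article. The following Python sketch shows the basic idea under stated assumptions: slice boundaries (in samples) and a frame-wise pitch track are hypothetical inputs standing in for a slicer and a pitch analyser, each slice's descriptor is the mean of the frames it spans, and the slices are then sorted by that value.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Slice:
    start: int  # first sample of the slice
    end: int    # one past the last sample


def mean_descriptor(track, hop, s):
    """Average a frame-wise descriptor (e.g. pitch in Hz) over the frames inside a slice."""
    first = s.start // hop
    last = max(first + 1, s.end // hop)  # always use at least one frame
    return float(np.mean(track[first:last]))


def reorder(slices, key, descending=False):
    """Return the slices sorted by a per-slice key function (low to high by default)."""
    return sorted(slices, key=key, reverse=descending)


# Hypothetical pitch track: one value per 512-sample hop.
hop = 512
pitch_hz = np.array([220.0] * 4 + [110.0] * 4 + [330.0] * 4)
slices = [Slice(0, 2048), Slice(2048, 4096), Slice(4096, 6144)]

by_pitch = reorder(slices, key=lambda s: mean_descriptor(pitch_hz, hop, s))
by_duration = reorder(slices, key=lambda s: s.end - s.start, descending=True)
print([s.start for s in by_pitch])  # [2048, 0, 4096]
```

Loudness or any other descriptor works the same way by passing a different track or key function; the playback order is simply the sorted list.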

For Still Life, I worked with source recordings of vocal improvisations by mezzo-soprano Marika Schultze, and field recordings captured in Oslo, Norway, and Gainesville, Florida. Slicers were coupled with two different methods of granular synthesis native to SuperCollider. In the first, I used the GrainBuf UGen to construct granular streams from a sound file with audio-rate precision, but with limited access to individual grain parameters. GrainBuf was used for synchronous granular synthesis, in which grains are triggered at a periodic rate (the grain frequency), imposing modulable formant peaks onto the sources. As microsound enthusiasts are aware, the grain envelope in this case plays a significant role in shaping the artificially imposed resonance structure. Smooth envelopes such as the Hann window were used to create sonorous, legato lines, and sharp, percussive envelopes to create broadband textures. In Example 1, pitch analysis of the vocal source (in Hz) was mapped to the frequency of the synchronous granular stream:

Example 1: Still Life
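GrainBuf runs in real time on the SuperCollider server; the offline Python sketch below only illustrates the principle of synchronous granular synthesis described above. One grain is triggered every 1/f seconds, so the grain frequency f sets the perceived pitch, and f can follow a pitch track. The function name, the 20 ms grain duration, the exponential "percussive" envelope, the noise stand-in source, and the pitch-track values are assumptions for illustration.

```python
import numpy as np


def sync_granular(source, sr, grain_freq, out_dur, grain_dur=0.02, envelope="hann"):
    """Synchronous granular synthesis: one grain every 1 / grain_freq seconds.

    grain_freq is either a constant (Hz) or a function t -> Hz, e.g. an
    interpolated pitch track. Grains are read from `source` at the same time
    position as the output (no time-stretching) and overlap-added.
    """
    source = np.asarray(source, dtype=float)
    n_out = int(out_dur * sr)
    g_len = int(grain_dur * sr)
    if envelope == "hann":
        env = np.hanning(g_len)  # smooth window: sonorous, legato result
    else:
        env = np.exp(-8.0 * np.linspace(0.0, 1.0, g_len))  # sharp attack, fast decay
    freq = grain_freq if callable(grain_freq) else (lambda t: grain_freq)
    out = np.zeros(n_out + g_len)
    span = len(source) - g_len  # assumes the source is longer than one grain
    t = 0.0
    while t < out_dur:
        onset = int(round(t * sr))
        read = int(t * sr) % span
        out[onset:onset + g_len] += source[read:read + g_len] * env
        t += 1.0 / freq(t)  # periodic grain triggers impose the perceived pitch
    return out[:n_out]


# Map a (hypothetical) vocal pitch track to the grain frequency.
sr = 8000
track_t = np.array([0.0, 0.5, 1.0])
track_hz = np.array([110.0, 220.0, 165.0])
voice = np.random.default_rng(0).standard_normal(sr * 2)  # stand-in source
stream = sync_granular(voice, sr, lambda t: np.interp(t, track_t, track_hz), out_dur=1.0)
```

Passing any value other than `"hann"` for `envelope` selects the sharp exponential grain shape, which shifts the result toward a brighter, more broadband texture, mirroring the envelope choices described above.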

In the second approach, I used the OffsetOut UGen to schedule a sampler with sample-accurate timing. This approach afforded lower-level control over individual grains, including the ability to apply time-varying envelopes to grain pitch or to spatialize individual grains.
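In SuperCollider, synths start on control-block boundaries (64 samples by default), and OffsetOut compensates by delaying a synth's output by its sub-block offset so that a timestamped event sounds at the exact sample. The arithmetic can be sketched in Python; the function names and the 48 kHz sample rate are assumptions, and nothing here is SuperCollider API.

```python
def block_and_offset(event_time_s, sr=48000, block_size=64):
    """Split a scheduled event time into (control block index, sample offset in that block)."""
    sample = round(event_time_s * sr)
    return divmod(sample, block_size)


def block_quantization_error_ms(event_time_s, sr=48000, block_size=64):
    """Timing error (ms) if the event were started at the start of its control block."""
    _, offset = block_and_offset(event_time_s, sr, block_size)
    return 1000.0 * offset / sr


# Grains scheduled 10 ms apart: without the offset, some would start early
# by a fraction of a block while others would not, i.e. timing jitter.
for t in (0.010, 0.020, 0.030):
    print(t, block_and_offset(t), round(block_quantization_error_ms(t), 3))
```

At 48 kHz, a 64-sample block lasts about 1.33 ms, so uncorrected start times in a dense grain stream could deviate by up to that amount from grain to grain.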

An example of the latter can be heard from approximately 4:04 to 4:53 in Still Life. In this section, source recordings were sliced according to changes in spectral energy. Each slice was further divided into four equal parts, each iterated in a separate channel (eight channels in total for stereo sources). I chose a series of beat subdivisions for each slice, over which the four slice parts would recycle. The rhythmic subdivisions remained constant across different input sources.
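The exact scheduling code is not given in the article, so the following Python sketch makes its assumptions explicit: each slice lasts one beat, each slice takes its pulses-per-beat value from a series of subdivisions, and the four equal parts of the slice recycle across those pulses, one part per channel. The subdivision values, beat length, and slice boundaries are hypothetical.

```python
def quarter(start, end, parts=4):
    """Split a slice [start, end) (in samples) into `parts` equal sub-slices."""
    edges = [start + (end - start) * i // parts for i in range(parts + 1)]
    return list(zip(edges[:-1], edges[1:]))


def pulse_events(slices, subdivisions, beat_s, parts=4):
    """Return (onset_s, part_index, (part_start, part_end)) events.

    Assumptions: each slice occupies one beat, subdivisions[i] gives the pulses
    per beat for slice i (cycling if the list is shorter), and the parts recycle
    across those pulses. The part index doubles as the output channel for mono
    sources; a stereo source would use channels 2 * part and 2 * part + 1.
    """
    events = []
    for i, (start, end) in enumerate(slices):
        sub = quarter(start, end, parts)
        n = subdivisions[i % len(subdivisions)]
        for k in range(n):
            part = k % parts
            events.append((i * beat_s + k * beat_s / n, part, sub[part]))
    return events


# The same subdivision series drives every source, so layered sources share the rhythm.
subdivisions = [3, 5, 4, 7]
kick = [(0, 4800), (4800, 9600)]
voice = [(0, 12000), (12000, 20000)]
for name, slices in (("kick", kick), ("voice", voice)):
    print(name, [round(onset, 3) for onset, _, _ in pulse_events(slices, subdivisions, beat_s=0.5)])
```

Because the onset times depend only on the slice index and the subdivision series, the kick and voice layers produce identical pulse grids even though their slices contain different material.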

My idea was to compose a series of metrically dissimilar pulse groups, iterated in sequence and imposed on multiple sound sources. These were then layered together, each unfolding uniquely but bound to the same predetermined rhythmic structure. Disparate sources became linked by common behavior.

The separate audio channels were later spatialized in ambisonics, moving from the front to the back of the listener at equal divisions of the horizontal plane. For percussive or punctuated sources, the attack portions of slices were localized at the front of the listening space and the release portions at the back, with the two middle parts at interpolating positions in between.
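The article does not list the angles used. One reading of "equal divisions of the horizontal plane", sketched in Python below, places the four slice parts on four left/right pairs whose azimuths cut the circle into eight equal 45° sectors, with part 0 (the attack) at the front pair and part 3 (the release) at the rear pair. The half-sector offset (22.5°) and the ambiX sign convention (positive azimuth = left) are assumptions.

```python
def pair_azimuths(n_pairs=4):
    """Left/right azimuth pairs (degrees; positive = left, as in ambiX) spread evenly
    from front to back: the circle is cut into 2 * n_pairs equal sectors."""
    step = 180.0 / n_pairs
    return [(step * (p + 0.5), -step * (p + 0.5)) for p in range(n_pairs)]


def part_position(part, channel, n_pairs=4):
    """Azimuth for a slice part: part 0 (attack) on the front pair, the last part
    (release) on the rear pair, parts in between on the interpolating pairs.
    channel 0 = left, 1 = right."""
    return pair_azimuths(n_pairs)[part][channel]


print(pair_azimuths())  # [(22.5, -22.5), (67.5, -67.5), (112.5, -112.5), (157.5, -157.5)]
```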

The sound example (Example 2) begins with a synthesized kick drum and pitch-shifted snare sound, eventually joined by a frequency-modulated voice – all pulsating in synchrony.

Example 2: Still Life

Ambisonic Tools

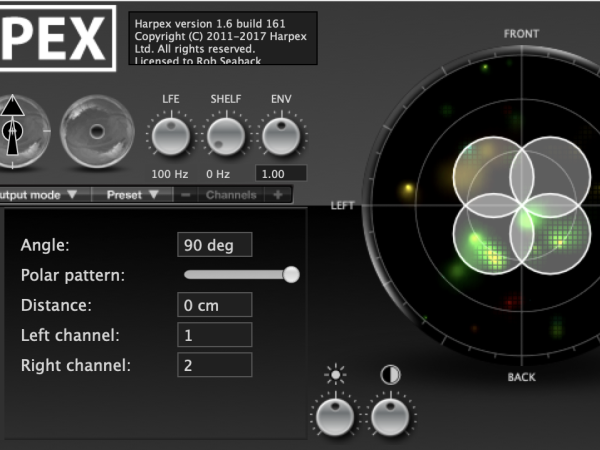

Compositions of site-unspecific were assembled in Reaper with ambisonics plugins by IEM, ambiX, and Harpex. Harpex was used for decoding in conventional studio spaces, i.e., stereo, quadraphonic, 5.1, 7.1, and octophonic studios. Harpex mitigates the limitations of low ambisonic orders through active matrixing.5 It was an invaluable tool during the COVID-19 era, when many studio spaces were inaccessible and I had to develop an ambisonic workflow in my home studio.

Harpex also allowed ‘up-sampling’ the ambisonic resolution of recordings made with the Soundfield SPS200 microphone, from first order (FOA) to third order (3OA). Using the SPS200, despite its limited spatial resolution, was a pivotal learning experience in developing a methodology for spatial audio composition. Vocal recordings for Still Life were made with the microphone in a dry studio space as well as in the reverberant Levinsalen at the Norwegian Academy of Music (Figure 1). While the dry recordings were useful in many ways, the reverb of Levinsalen provided a powerful reference point for the degree of immersion I wanted from my music in surround loudspeaker configurations. Once decoded, the sound projection had no spatial gaps, which was easy to sense in the lush reverberation. This contrasts with methods of synthesizing virtual ambisonic sources from mono or stereo files, which require post-production or spatial synthesis strategies to achieve a similar effect.

In my compositional approach, I used the full spatial immersion of sound field recordings as an expressive parameter, while spatially synthesizing conventional recordings either to contract the image to a smaller area or to disperse it as point sources. In Still Life, the sound field recordings from Levinsalen capture incidental sounds (subtle vocalizations and body movements) that add a mysterious spatial depth to the work at a low dynamic level, encouraging deep listening.

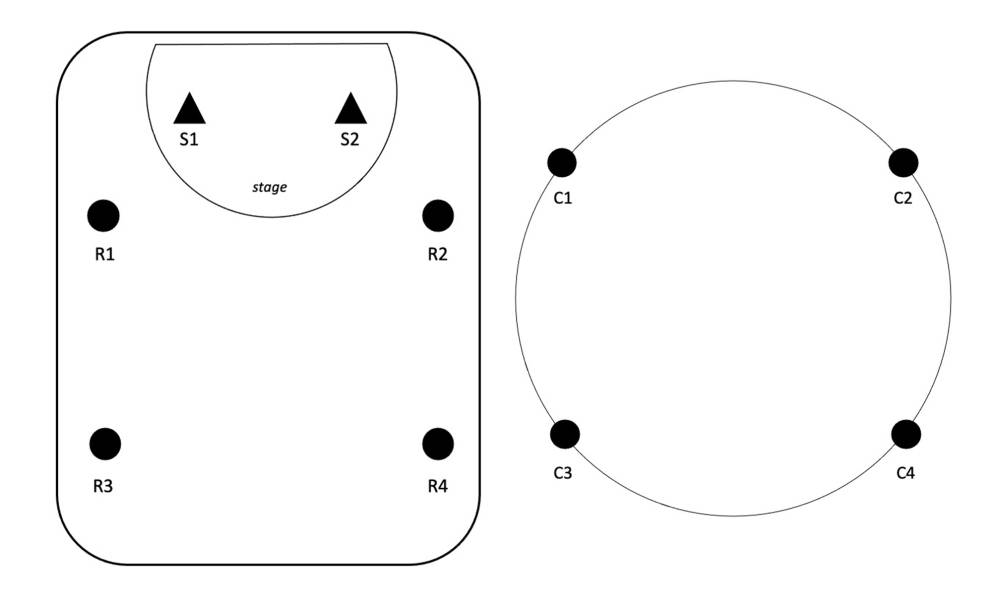

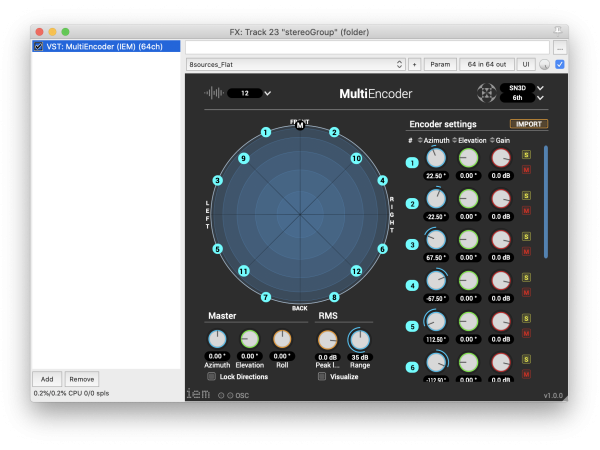

Multichannel sound files synthesized in SuperCollider were encoded as virtual sources arranged in basic spatial patterns, as shown in Figure 2. Here, sources are grouped in left/right pairs, beginning at the front of the listener and evenly distributed to the back. This arrangement is partly perceptible in the sound examples above, which were decoded to stereo for this presentation.

Figure 2. Virtual source distribution with IEM MultiEncoder Plugin
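The IEM MultiEncoder encodes each input channel as a virtual source at a chosen direction. A minimal Python sketch of the same operation at first order only, assuming the ambiX convention (ACN channel order W, Y, Z, X with SN3D normalization), looks like this; the plugin itself works at much higher orders, and the even 45° spacing of the eight azimuths is an assumption rather than the layout actually used in Still Life.

```python
import numpy as np


def encode_foa(signal, azimuth_deg, elevation_deg=0.0):
    """Encode a mono signal as a first-order ambiX source (ACN order W, Y, Z, X; SN3D)."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    gains = np.array([
        1.0,                      # W (ACN 0)
        np.sin(az) * np.cos(el),  # Y (ACN 1)
        np.sin(el),               # Z (ACN 2)
        np.cos(az) * np.cos(el),  # X (ACN 3)
    ])
    return gains[:, None] * np.asarray(signal, dtype=float)[None, :]


def encode_sources(channels, azimuths_deg):
    """Encode several mono channels at their own azimuths and sum them into one B-format stream."""
    return sum(encode_foa(ch, az) for ch, az in zip(channels, azimuths_deg))


# Eight synthesized channels placed as four left/right pairs from front to back.
rng = np.random.default_rng(1)
channels = rng.standard_normal((8, 4800))
azimuths = [22.5, -22.5, 67.5, -67.5, 112.5, -112.5, 157.5, -157.5]
bformat = encode_sources(channels, azimuths)  # shape (4, 4800)
```

Higher orders add further spherical-harmonic channels per source; the structure (one gain vector per direction, summed over sources) stays the same.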

Surround Reverb in Still Life

The approach to artificial reverb in Still Life was convolution with a set of surround impulse responses (IRs) recorded from two source positions, each captured by four receivers.6 I used the mcfx_convolver plugin from Matthias Kronlachner’s multichannel audio plugin suite, but any multichannel convolution plugin should work for this method. I used the plugin’s left input as source 1 (see Figure 3), convolved with the four receiver channels, and the right input as source 2. Panning the input (pre-FX) from left to right therefore crossfades between the source/receiver sets of the two source positions.
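The routing can be expressed offline in a few lines of Python. This is a sketch, not mcfx_convolver's implementation: the IR arrays are random placeholders rather than the measured responses, and an equal-power pan law is assumed for the left/right crossfade (the actual curve depends on the DAW's pan law).

```python
import numpy as np


def two_position_reverb(x, pan, irs_src1, irs_src2):
    """Convolve a mono input with two sets of 4-receiver impulse responses.

    irs_src1, irs_src2: arrays of shape (4, ir_len), source position 1 or 2 to
    receivers R1..R4 (both sets must have the same length). pan = 0.0 feeds only
    source 1, pan = 1.0 only source 2; an equal-power law is assumed in between.
    Returns shape (4, len(x) + ir_len - 1): one reverb channel per receiver.
    """
    x = np.asarray(x, dtype=float)
    g1, g2 = np.cos(pan * np.pi / 2), np.sin(pan * np.pi / 2)
    return np.stack([
        np.convolve(g1 * x, h1) + np.convolve(g2 * x, h2)
        for h1, h2 in zip(irs_src1, irs_src2)
    ])


sr = 8000
rng = np.random.default_rng(2)
decay = np.exp(-np.arange(sr // 2) / (0.1 * sr))
irs_a = rng.standard_normal((4, sr // 2)) * decay  # placeholders, not measured IRs
irs_b = rng.standard_normal((4, sr // 2)) * decay
wet = two_position_reverb(rng.standard_normal(800), pan=0.25, irs_src1=irs_a, irs_src2=irs_b)
```

Each of the four output rows corresponds to one receiver, which is what makes it possible to encode them afterwards as four virtual sources at the receiver positions.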

The four-channel output was then encoded as four virtual ambisonic sources, simulating the microphone arrangement of the IR capture. This allowed reverb with higher spatial resolution than can be achieved with B-format impulse responses. In early versions of Still Life, the reverb was encoded in third order (3OA) to exploit the diffuseness of lower orders, while early reflections were simulated as a chain of short delays encoded in sixth order (6OA).

Figure 3: IR source (S) and receiver (R) positions and convolved signals (C) as ambisonic virtual sources.
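The delay times and gains of the early-reflection chain are not given in the article. The Python sketch below shows one way to build a series chain of short delays in which each stage is kept as a separate output tap, so that every tap could be encoded as its own virtual source (the sixth-order encoding step is omitted). All numbers are hypothetical.

```python
import numpy as np


def delay_chain(x, sr, delays_ms, gains):
    """Series chain of short delays. Stage i delays and scales the output of
    stage i - 1; every stage is returned as its own tap (one row per tap)."""
    x = np.asarray(x, dtype=float)
    delays = [int(round(d * sr / 1000.0)) for d in delays_ms]
    current = np.concatenate([x, np.zeros(sum(delays))])  # room for the full chain
    taps = []
    for d, g in zip(delays, gains):
        current = g * np.concatenate([np.zeros(d), current[:len(current) - d]])
        taps.append(current)
    return np.stack(taps)


# Hypothetical reflection pattern: six taps between 7 and 45 ms after the direct sound.
sr = 48000
taps = delay_chain(np.ones(480), sr, [7, 5, 9, 6, 8, 10], [0.7, 0.8, 0.75, 0.8, 0.7, 0.75])
```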

Ambisonics in Concert

Still Life was premiered at a self-produced concert at the Norwegian Academy of Music (NMH) on October 2, 2021. It was presented again shortly thereafter at IEM, Graz, for the Student 3D Audio Production Competition, where it was awarded the Gold Prize in the Computer Music Category. In 2022, the work was presented over 22 loudspeakers at the Sound and Music Computing Conference in St. Étienne, France, and over 16 loudspeakers at the International Computer Music Conference in Limerick, Ireland. The most recent presentation was at the 2023 Ultima Contemporary Music Festival, in a 16-loudspeaker 2D configuration.

When loudspeaker arrays of sufficient density are available, the Spat5 package of Max externals has been my preferred tool for decoding, owing to the decoder’s overall flexibility and its options for experimenting with different decoding methods and optimization strategies. Among the latter, I have found the “In-Phase/Max-Re” setting effective: it applies in-phase optimization to low frequencies and max-rE optimization to high frequencies, split at a user-defined crossover frequency. Additionally, speaker calibration is convenient with the spat5.align~ object, which receives the same loudspeaker definitions as the decoder.
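Spat5's internal formulas are not documented in this article, but the idea behind a dual-band "in-phase / max-rE" decoder can be illustrated with the standard per-order weights for 2D (horizontal) ambisonics. The Python sketch assumes a 2D array and an arbitrary 400 Hz crossover; 3D layouts use different weight formulas.

```python
import math


def in_phase_weights_2d(order):
    """Per-order in-phase weights for 2D (horizontal) ambisonics: N!^2 / ((N+m)! (N-m)!)."""
    n = order
    return [
        math.factorial(n) ** 2 / (math.factorial(n + m) * math.factorial(n - m))
        for m in range(n + 1)
    ]


def max_re_weights_2d(order):
    """Per-order max-rE weights for 2D ambisonics: cos(m * pi / (2N + 2))."""
    return [math.cos(m * math.pi / (2 * order + 2)) for m in range(order + 1)]


def dual_band_weights(order, crossover_hz):
    """Weights for a two-band decoder: in-phase below the crossover, max-rE above.
    In a real decoder each set scales a low- or high-passed copy of the signal."""
    return {
        f"below {crossover_hz} Hz": in_phase_weights_2d(order),
        f"above {crossover_hz} Hz": max_re_weights_2d(order),
    }


for band, w in dual_band_weights(order=3, crossover_hz=400).items():
    print(band, [round(g, 3) for g in w])
```

In-phase weights taper the higher orders strongly, so that no loudspeaker is driven in opposite polarity to the source direction, which helps listeners seated away from the center; max-rE weights taper more gently, concentrating energy toward the source direction.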

The decoding stage of ambisonics is certainly the most pivotal, and often the most problematic, in concert settings. At the aforementioned events, for example, it was challenging to obtain accurate information about speaker positions, and time was severely limited for checking the calibration or testing more than one decoding method. Anecdotally, there are inconsistencies within the academic discipline of sonic computing with regard to concert production: degrees of professionalism vary widely from event to event and conference to conference. A poorly decoded ambisonics piece may sound lo-fi compared to channel- or object-based spatial audio works, which can reduce audience engagement or give the impression of poor production.
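Accurate speaker positions matter because calibration is derived from them. The following Python sketch shows the basic geometry behind aligning an array once positions are known: each loudspeaker is delayed and attenuated to match the farthest one at the listening position, assuming a 1/r level law and a speed of sound of 343 m/s. It is not spat5.align~'s code, and the positions are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly at 20 °C


def align(positions_m, listener=(0.0, 0.0, 0.0)):
    """Per-speaker (delay_ms, gain_db) that time- and level-align an array at the
    listening position, relative to the farthest loudspeaker (1/r level law)."""
    distances = [math.dist(p, listener) for p in positions_m]
    d_max = max(distances)
    return [
        ((d_max - d) / SPEED_OF_SOUND * 1000.0, 20.0 * math.log10(d / d_max))
        for d in distances
    ]


# Hypothetical measured positions (x, y, z) in metres for three loudspeakers.
speakers = [(3.43, 0.0, 0.0), (0.0, 1.715, 0.0), (-2.0, -2.0, 1.0)]
for delay_ms, gain_db in align(speakers):
    print(f"delay {delay_ms:5.2f} ms, gain {gain_db:6.2f} dB")
```

A position error of 0.34 m already shifts the computed delay by about 1 ms, which is why unreliable layout information undermines the calibration.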

In Oslo, this situation was manageable because high-density loudspeaker arrays were available for rental (from the 3DA audio group), and Notam and Electric Audio Unit produce spatial audio concerts semi-regularly. Artists and producers for these concerts are typically specialists with deep experience in spatial audio. For my concert at the 2023 Ultima Festival, I combined loudspeakers from NMH with additional Genelec loudspeakers from 3DA to maximize the ambisonic order. I also worked with an on-site production team to set up and calibrate the loudspeakers. The process took many hours and is a testament to the necessity of detailed planning, ample setup time, and a skilled labor force for ambisonics concerts.
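The link between loudspeaker count and achievable order can be made concrete with the common rule-of-thumb minimums: at least 2N + 1 evenly spaced loudspeakers for horizontal order N, and at least (N + 1)² for periphonic (3D) order N. The Python sketch below applies them; decoders designed for irregular layouts (e.g., AllRAD) relax these limits, and the article does not state which orders were used at each event.

```python
import math


def max_order_2d(n_speakers):
    """Highest horizontal order for which a ring has at least 2N + 1 loudspeakers."""
    return (n_speakers - 1) // 2


def max_order_3d(n_speakers):
    """Highest periphonic order for which a layout has at least (N + 1)^2 loudspeakers."""
    return math.isqrt(n_speakers) - 1


print(max_order_2d(16), max_order_3d(22))  # 7 3
```

By this rule, a 16-loudspeaker ring such as the 2023 Ultima setup would support up to seventh order horizontally, whereas 22 loudspeakers spread over a sphere would reach third order.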

Decoding in Stereo

Reducing the 3D sound image to stereo was the final challenge for the site-unspecific compositions. Naturally, the visceral, full-bodied experience of immersive audio formats is an inspiration for this work. Nevertheless, I am still satisfied with simple stereo listening and eager to reach audiences through this widely accessible medium.

I experimented with presets in Harpex that simulate typical stereo miking techniques for decoding. The Blumlein preset, simulating two bi-directional mics in a perpendicular arrangement, was well-suited for capturing much of the 3D image. It generates a very wide stereo spread with no volume loss in the back. However, this preset did not work well for all materials. In the worst case, transient smearing occurred with broadband sounds that moved rapidly between different spatial positions. ORTF, NOS, and XY simulations were much more stable for this material. In other cases, coincident and near-coincident pairs were simply used to fill in the stereo space – to create centered focal points, closer to the commercial standards of stereo production.

I created stems according to the spatial profile of sound materials, each rendered from a different stereo decoding preset: XY simulation for the narrowest reduction and Blumlein for the widest and most complete. Finally, stems were sent for professional mastering.

Figure 4.1. Blumlein stereo settings in Harpex

Figure 4.2. ORTF stereo settings in Harpex
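Harpex decodes parametrically (it estimates plane-wave directions), so its presets will not behave exactly like plain linear virtual microphones. Still, the coincident presets can be approximated from first-order B-format with the sketch below, which assumes ambiX input (ACN order W, Y, Z, X; SN3D) and uses a figure-of-eight pair at ±45° for Blumlein and a cardioid pair at ±45° for XY. Near-coincident pairs such as ORTF and NOS also rely on capsule spacing, which this sketch omits.

```python
import numpy as np


def virtual_mic(bformat, azimuth_deg, pattern):
    """Horizontal virtual microphone from first-order ambiX (ACN: W, Y, Z, X; SN3D).

    pattern: 1.0 = omni, 0.5 = cardioid, 0.0 = figure-of-eight.
    """
    w, y, _z, x = bformat
    th = np.radians(azimuth_deg)
    return pattern * w + (1.0 - pattern) * (np.cos(th) * x + np.sin(th) * y)


# Coincident pairs only: (half-angle in degrees, pattern). Spaced pairs such as
# ORTF or NOS also need inter-capsule delays, which this sketch leaves out.
PAIRS = {"blumlein": (45.0, 0.0), "xy": (45.0, 0.5)}


def stereo_decode(bformat, pair):
    """Render left/right signals from B-format with the chosen coincident pair."""
    half_angle, pattern = PAIRS[pair]
    left = virtual_mic(bformat, +half_angle, pattern)
    right = virtual_mic(bformat, -half_angle, pattern)
    return np.stack([left, right])


def plane_wave(azimuth_deg):
    """Unit-amplitude horizontal plane wave in ambiX FOA (one sample)."""
    az = np.radians(azimuth_deg)
    return np.array([[1.0], [np.sin(az)], [0.0], [np.cos(az)]])


print(stereo_decode(plane_wave(180.0), "blumlein").ravel())  # rear source at full level, polarity inverted
print(stereo_decode(plane_wave(180.0), "xy").ravel())        # rear source strongly attenuated
```

The rear source shows the trade-off described above: the Blumlein pair reproduces it at full level (with inverted polarity), while the XY cardioids attenuate it heavily and keep the image narrower.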

Summary

The composition of Still Life was a technical exploration of sound analysis and decomposition tools, algorithmic processes, experimental recording, and ambisonics. I hope that this account of ambisonics from the standpoint of artistic practice, rather than scientific research, will be useful to readers, particularly regarding the negotiation between immersive concert settings and commercial listening formats. Methods of sound processing and instrument design may serve to stimulate the creative mind, and their integration within an ambisonics workflow serves as a practical demonstration.

The methods and tools leave their mark on the virtual listening experience as real objects and spaces are absorbed by digitization and creatively reconfigured. But ultimately, Still Life is not a technical exercise. Techniques described in this article were all means to an end: the manifestation of a poetic space capitalizing on the corporeal and emotional potentials of the sounds at hand.

- 1

Robert Seaback, “Embodied/Encoded: A Study of Presence in Digital Music,” Research Catalogue (2024). https://doi.org/10.22501/nmh-ar.1397388.

- 2

Natasha Barrett, “Reconfiguring the Landscape,” Research Catalogue (2024). https://doi.org/10.22501/rc.1724428.

- 3

Seaback, “Embodied/Encoded.”

- 4

P.A. Tremblay et al., “The Fluid Corpus Manipulation Toolbox,” Zenodo (July 7, 2022). https://doi.org/10.5281/zenodo.6834643.

- 5

Harpex LTD, “Technology,” https://harpex.net/ (accessed May 4, 2022).

- 6

I did not create these IRs myself. They were shared with me by a colleague.

Robert Seaback

Robert Seaback is a composer and sound artist from the US, currently based in Florida. His music expresses viscerality, the hyperreal, digital stasis, and continuums between – integrating voices, instruments, soundscapes, and synthetic sources through digital techniques. He has composed or performed in mediums including spatial audio, mixed music for ensemble, electroacoustic improvisation, and sound installation. His music has been presented at numerous international events and has been recognized with several awards from notable competitions.
