The human voice is probably the oldest, most complex, and most versatile musical instrument, and the hardest to imitate, whether by analog or digital means.

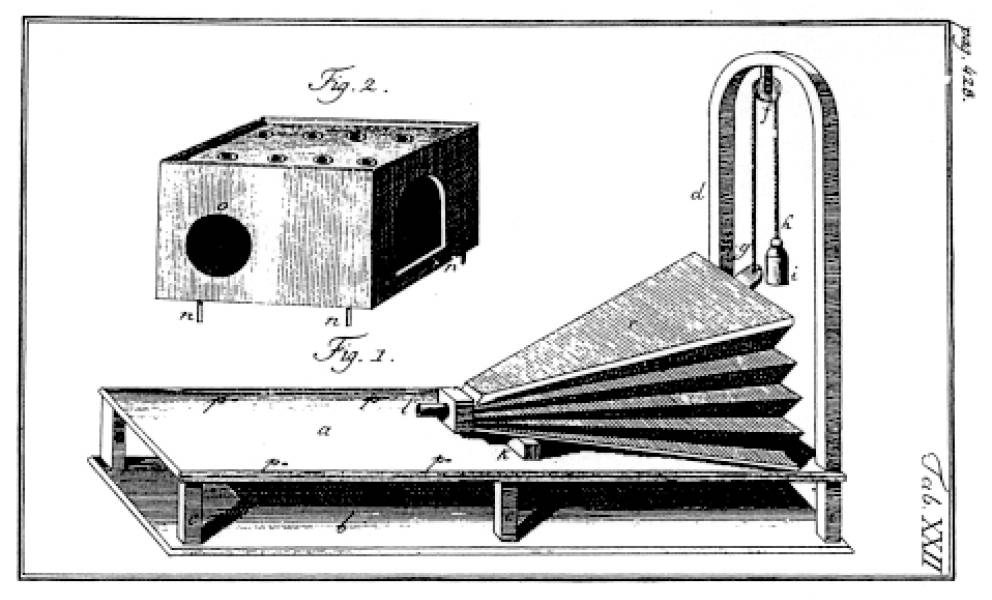

The first successful attempts to create a speaking voice detached from the body date back to the 18th century.

Wolfgang von Kempelen developed the first functioning speaking machine, capable of producing at least some intelligible speech sounds.

Von Kempelen attempted to replicate the human vocal apparatus using wood, rubber, leather, and ivory. The apparatus was played with the hands and forearm, and its intelligibility depended heavily on the virtuosity of the player.

The speaking machines of the 19th century also resembled musical instruments, both visually and in the way they were played. The speaking machine Euphonia, for example, was operated via a keyboard and foot pedals, strongly reminiscent of an organ, despite its theatrical presentation with a woman’s head.

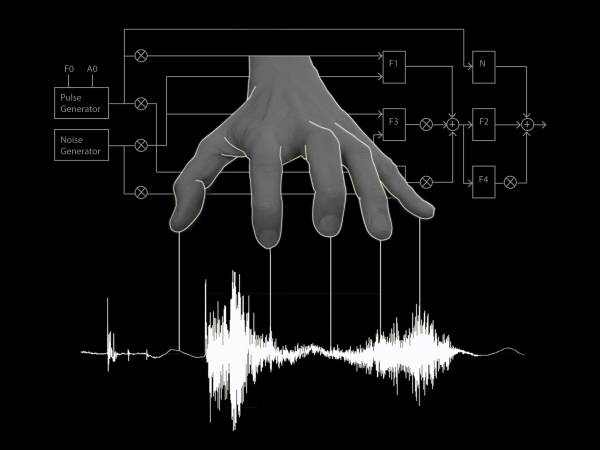

The first electrically generated speaking voice - the Voder - sent a source signal through various variable filters controlled via hand buttons. A wrist switch alternated between voiced and unvoiced sounds, while a foot pedal controlled the fundamental frequency.

Although these early speaking machines were not originally intended as musical instruments but rather for scientific or technical purposes, they were played like musical instruments.

Their operation was highly physical and auditory-haptic, linking the sensorimotor system directly to the phonetics and acoustics of the artificial voice. Playing these instruments required learned and trained fine motor skills - often developed over years of practice. My self-built interfaces for electronic voice, described below, similarly focus on a physical approach to playing and its connection to vocal and speech sounds.

The Act of Painting Voices

My first work on artificial voices began with spectrograms:

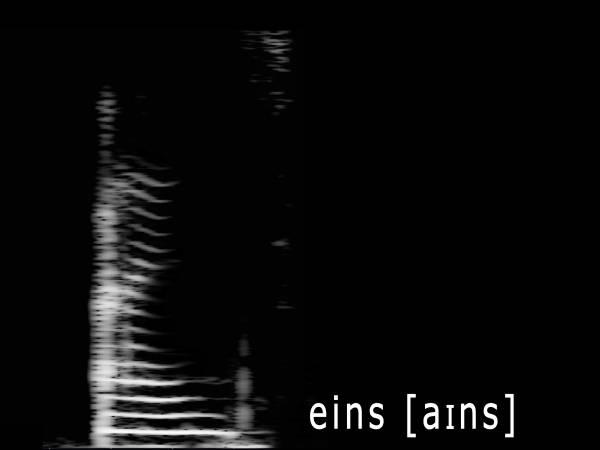

Spectrograms are visualizations of sounds, spreading frequencies along a time axis - a kind of "fingerprint" of sound that reveals its spectral properties.

Spectrogram of the spoken word "eins" (German for "one")
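To make the idea of a spectrogram concrete, here is a minimal Python sketch that computes one from a test tone. It is an illustration only, not the software used for the works described here: the frame size, hop size, sample rate, and the function name `spectrogram` are all assumptions, and a plain (slow) discrete Fourier transform is used so that no external library is needed.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return a list of frames; each frame holds magnitudes for bins 0..frame_size//2."""
    # A Hann window reduces leakage between neighbouring frequency bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            # Plain DFT sum for bin k (slow but dependency-free).
            total = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            magnitudes.append(abs(total))
        frames.append(magnitudes)
    return frames


SAMPLE_RATE = 8000  # assumed sample rate in Hz
# Test signal: a 1000 Hz sine. Bins are 8000 / 64 = 125 Hz apart, so it sits on bin 8.
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(512)]
frames = spectrogram(tone)
peak_bin = max(range(len(frames[0])), key=lambda k: frames[0][k])
```

Each entry of `frames` is one vertical slice of the image: time runs along the list, frequency along each slice, and the magnitude corresponds to the brightness of a pixel.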

In previous projects, I had already experimented with sonifying images by scanning them from left to right using sine tone generators. The realization that any image could be interpreted as a spectrogram and thus sonified led me to the idea of creating specific sounds using hand-drawn spectrograms - with a particular interest in human voices.
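The left-to-right scan with sine tone generators can be sketched as a small bank of oscillators, one per image row, whose loudness follows the pixel brightness in the current column. This is a hedged illustration rather than the original implementation: the function `sonify_image`, the row-to-frequency mapping, the sample rate, and the column duration are assumptions chosen for brevity.

```python
import math

SAMPLE_RATE = 8000  # assumed sample rate in Hz


def sonify_image(image, row_freqs, column_duration=0.05):
    """Scan a grayscale image left to right; each row drives one sine oscillator.

    image: list of rows, top row first, brightness values in 0.0..1.0.
    row_freqs: frequency in Hz assigned to each row (an assumed mapping).
    """
    samples_per_column = round(SAMPLE_RATE * column_duration)
    n_columns = len(image[0])
    output = []
    for col in range(n_columns):
        for i in range(samples_per_column):
            # A global time index keeps every oscillator's phase continuous.
            t = (col * samples_per_column + i) / SAMPLE_RATE
            # Sum all oscillators, each weighted by the brightness in this column.
            value = sum(
                row[col] * math.sin(2 * math.pi * freq * t)
                for row, freq in zip(image, row_freqs)
            )
            output.append(value / len(image))  # keep the sum within -1..1
    return output


# A tiny 3-row "drawing": a diagonal stroke rising from low to high frequency.
image = [
    [0.0, 0.0, 1.0],  # top row -> highest frequency
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],  # bottom row -> lowest frequency
]
row_freqs = [880.0, 660.0, 440.0]
audio = sonify_image(image, row_freqs)
```

Played back, the diagonal stroke becomes three successive tones stepping upward, which is the basic sense in which any image can be read as a spectrogram.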

Hand-drawn Voice Archives

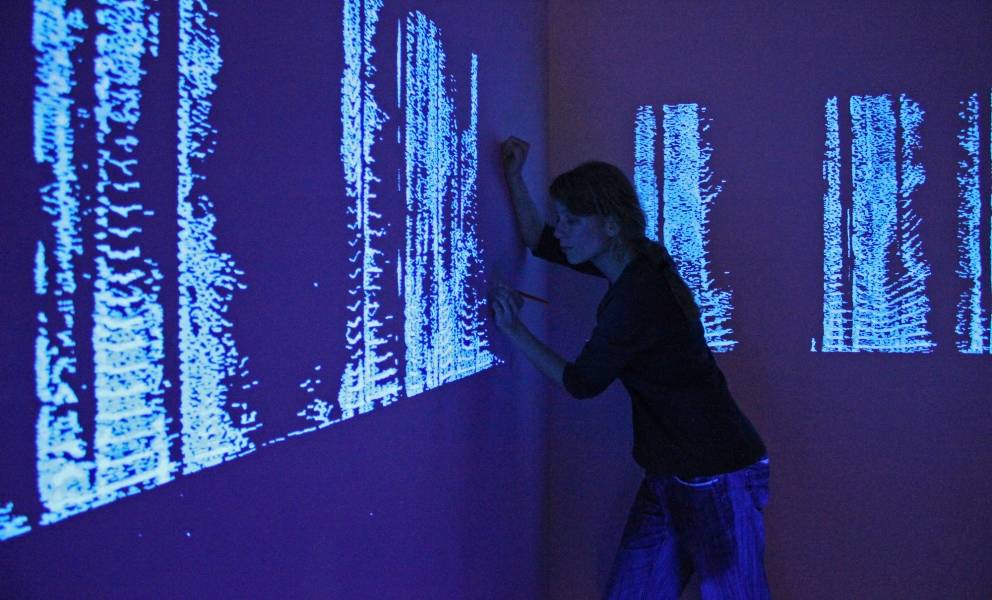

In the project Sound on Drawing, I paint spectrograms of voices onto walls using UV paint. Depending on the context, these spectrograms represent excerpts from interviews, poetic texts, or statements on language and identity. The space becomes an archive of inaudible voices, which can be made audible under specific conditions using an inverse FFT (fast Fourier transform).

"Ton auf Zeichnung" ("Sound on Drawing") in the exhibition "FINE SOUND – keine medienkunst", das weiße Haus, Vienna
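The inverse transform mentioned above can be illustrated with a single painted column. A drawn spectrogram stores only magnitudes, so any resynthesis has to assume or estimate the phase; the sketch below simply assumes zero phase for every bin. The function name `column_to_frame` and the frame size are illustrative assumptions, and a full resynthesis would additionally overlap and add many such frames.

```python
import cmath
import math


def column_to_frame(magnitudes, frame_size):
    """Inverse DFT of one spectrogram column, assuming zero phase for every bin."""
    # Build a full spectrum with conjugate symmetry so the result is real-valued.
    spectrum = [0j] * frame_size
    for k, mag in enumerate(magnitudes):
        spectrum[k] = complex(mag, 0.0)
        if 0 < k < frame_size // 2:
            spectrum[frame_size - k] = complex(mag, 0.0)
    frame = []
    for n in range(frame_size):
        total = sum(spectrum[k] * cmath.exp(2j * math.pi * k * n / frame_size)
                    for k in range(frame_size))
        frame.append(total.real / frame_size)
    return frame


FRAME_SIZE = 32
# One painted column: a single bright stroke at bin 4, silence elsewhere.
column = [0.0] * (FRAME_SIZE // 2 + 1)
column[4] = 16.0
frame = column_to_frame(column, FRAME_SIZE)
```

With these values the frame is one cosine that completes four cycles across the 32 samples, so a single stroke in the drawing turns into a single pure tone.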

This process of drawing voices is intense and revelatory, but also laborious and slow. In the follow-up project "Sound Calligraphy", I realized the idea of drawing voices in real time during a live performance while simultaneously sonifying them - in other words, of “speaking” through drawing. As part of the PEEK project "Digital Synesthesia", I developed a calligraphic drawing system in which signs and spectrally encoded sounds merge. By progressively reducing the complexity of speech spectrograms, I arrived at a minimal yet sufficient representation for each speech sound: simple enough to be drawn in a few strokes, yet retaining enough spectral information to be recognized when sonified.

During the performance, I draw these vocal calligraphies on paper; the drawings are filmed live and sonified in a loop. In this way, a text gradually unfolds, word by word, allowing the hand-drawn voice to “speak” about its own disembodied presence and identity.

Klangkalligrafie ("Sound Calligraphy"): performance in the exhibition DIGITAL SYNESTHESIA, Angewandte Innovation Lab, Vienna

Initially, this artificially generated voice is barely comprehensible, but with each additional stroke, fragments of speech become perceptible.

The Hand and the Voice - "Speaking and singing" Performance Instruments

With my self-built interfaces, I aim to control and perform digital and electronic sounds with the body, creating a meaningful connection between action, bodily response, and sound using sensors - similar to a traditional musical instrument. By integrating internal bodily processes as control signals, the body becomes even more directly involved in the musical interface.

Over the past years, I have developed a series of interfaces for electronic voice. My goal was to work with artificially generated or transformed voices in live musical performances. These instruments were designed both to extend and electronically augment my own voice—detaching it from my body—and to control artificial voices through gestures and body movements, akin to digital ventriloquism.

The voice interfaces aim to approximate the complexity of the human voice by enabling precise control over its key parameters.

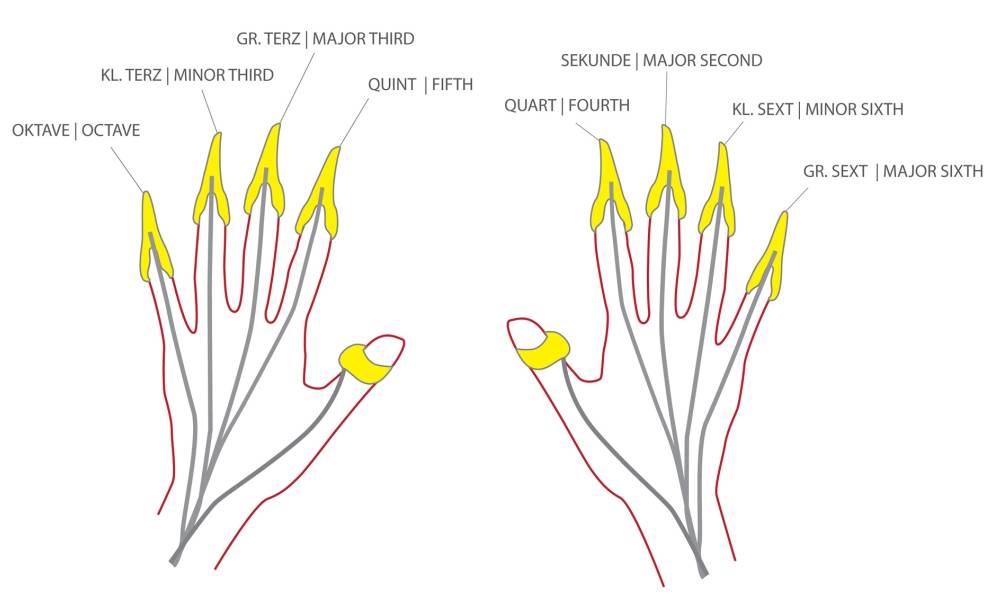

The first live instrument I developed for voice performance was the "Fingertip Vocoder", an interface worn on the fingers - initially wired, later connected via USB. Brass fingertip rings function as sensor switches, controlling a Pure Data patch that multiplies the performer's voice at specific intervals to create polyphonic sounds. The Fingertip Vocoder makes it possible to sing choral pieces solo, with chords being generated by different fingertip combinations, much like fingering techniques on a piano.
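How fingertip combinations might translate into chords can be sketched as a lookup from closed finger switches to musical intervals, each of which becomes a pitch ratio for one copy of the voice. The mapping below is hypothetical: the finger names, the chosen intervals, and the function `chord_ratios` are assumptions for illustration and are not taken from the actual Pure Data patch.

```python
# Hypothetical mapping: which finger adds which interval (in semitones)
# relative to the sung note.
FINGER_INTERVALS = {
    "index": 4,     # major third above
    "middle": 7,    # perfect fifth above
    "ring": 12,     # octave above
    "little": -12,  # octave below
}


def chord_ratios(active_fingers):
    """Return pitch ratios for the sung voice plus one copy per active finger."""
    ratios = [1.0]  # the unprocessed voice
    for finger in active_fingers:
        semitones = FINGER_INTERVALS[finger]
        # Equal temperament: each semitone multiplies frequency by 2**(1/12).
        ratios.append(2 ** (semitones / 12))
    return ratios


sung_hz = 220.0  # example sung pitch (A3)
voices = [round(sung_hz * r, 1) for r in chord_ratios(["index", "middle"])]
```

Here closing the index and middle switches while singing 220 Hz yields a major triad; in a live setup each ratio would be handed to a pitch shifter instead of being multiplied with a fixed frequency.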

I extended the functionality of the Fingertip Vocoder by integrating a Leap Motion Controller. This infrared sensor detects hand and finger positions in space, allowing additional parameters to be controlled: for instance, the left-right movement of the hand can shift the individual voices between the left and right audio channel, distributing them spatially.
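The left-right mapping can be illustrated with equal-power panning, a common way to move a voice between two channels without a dip in loudness at the centre. This is a sketch under assumptions: the function `pan_gains` and the position range of -200 to 200 are hypothetical values, not the sensor's actual coordinate range or the mapping used in the instrument.

```python
import math


def pan_gains(hand_x, x_min=-200.0, x_max=200.0):
    """Map a horizontal hand position to (left, right) gains with equal-power panning."""
    # Clamp the position, then normalise to 0.0 (far left) .. 1.0 (far right).
    position = (min(max(hand_x, x_min), x_max) - x_min) / (x_max - x_min)
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


left, right = pan_gains(0.0)   # hand centred: both channels equally loud
far_left = pan_gains(-500.0)   # beyond the assumed range, clamped to the left edge
```

Giving each voice of the chord its own offset along this axis would spread the choir across the stereo field as the hand moves.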

I also began to work spectrally with live-recorded or artificially generated voice material, navigating through the audio track as if moving through a three-dimensional sound space. Hand movements control the cursor scanning the sound file, and vocal sounds can be "frozen" at any point, remaining as continuous tones or noise, depending on whether the captured sound was voiced or unvoiced.

This process reveals the inherent musicality of spoken language itself.

Light Scans through the Body of the Voice

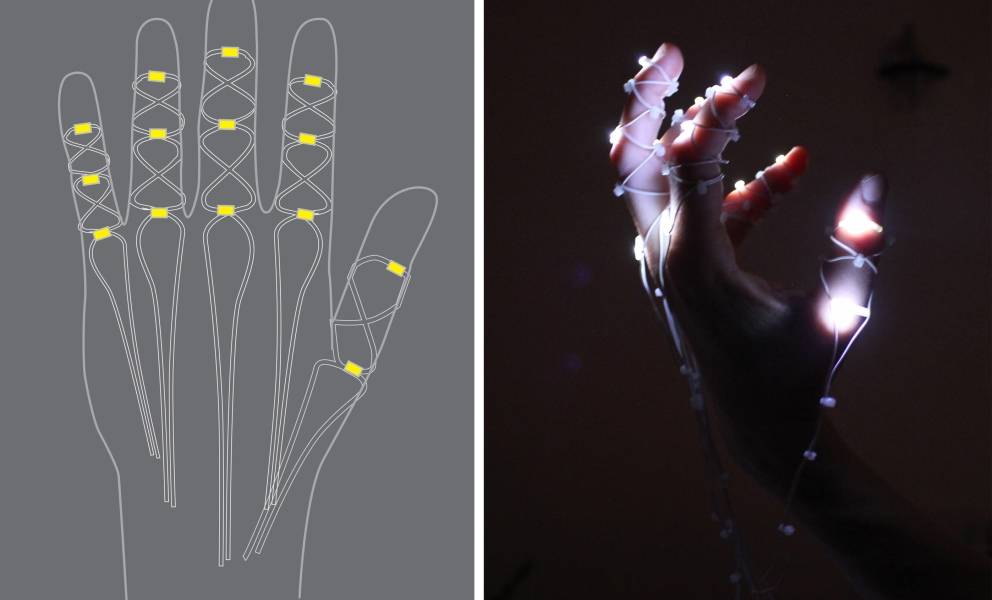

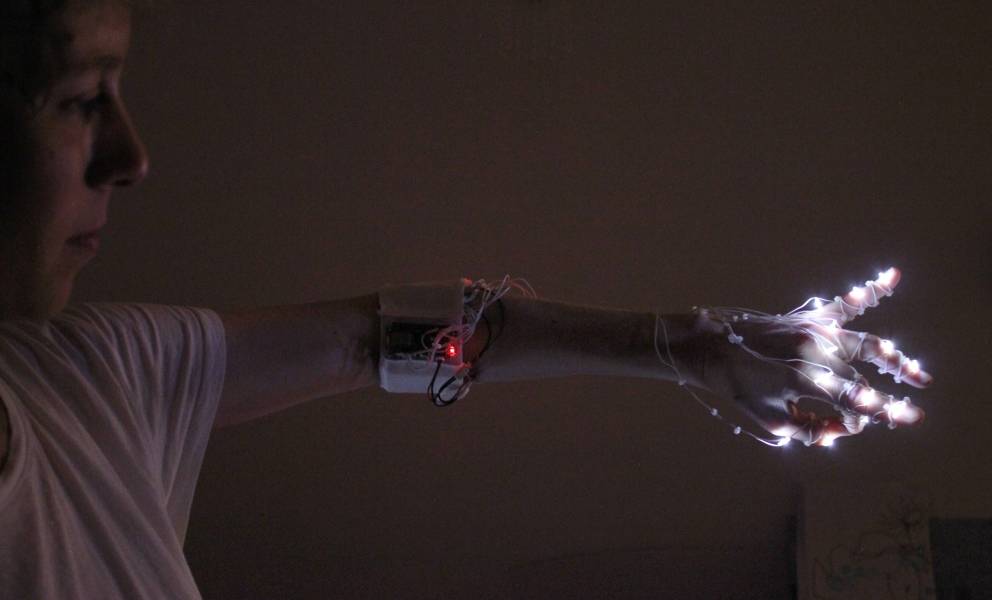

In the "Talking Hands" project, I replaced the fingertip rings with precise tracking of fingertips via the Leap sensor and designed a net-like LED interface covering the hands and fingers. This visualization makes the currently active sound layer visible, showing where I am within the “vocal space.”

For the first prototype of "Talking Hands", I combined gestures and movements with the speech synthesizer DECtalk. Finger and hand gestures activate speech sounds that are synthesized by DECtalk and processed in real time in MaxMSP. Hand movements control both intonation and volume, which makes a degree of melodic speech possible.

However, I wanted to work with a more natural-sounding artificial voice and explore its spectral qualities through gesture-based interaction. I generated a synthetic voice on the Resemble AI platform, modeled on my own voice. Integrated into a live performance workflow via Python, this voice serves as the basis for spectral manipulations in MaxMSP. The hand navigates through the time axis while simultaneously shifting through the overtone spectrum, modifying the timbre through subtle finger movements and hand rotations.

Digital Choirs and the Voice as a String Instrument

In my current performance "All of My Voices", I start with minimal vocal input and gradually generate an artificial choir that expands in space - initiated and conducted via the Fingertip Vocoder. In the second part of the performance, I play the "Voicebow", an electronic interface that transforms the voice into a string instrument by mapping it onto a monochord. Here, the voice merges with the string, expanding its potential as a manually played live instrument, enabling a spectrum from percussive consonants to bowed vowels.

Further Development

Technically, the potential for further development of my voice interfaces lies in the progress of AI-based voice synthesis.

While AI voices now exhibit high sound quality, their latency remains a challenge for live performance. I am therefore also interested in vocal tract models that allow direct manipulation of parameters such as lip and tongue position via sensor input. A virtual vocal tract, controlled in real time through an interface, could enable intuitive interaction and make non-verbal and experimental sounds more expressive.

Musically, I am working on integrating the Fingertip Vocoder’s pitch-shifting capabilities with the spectral manipulations of the Talking Hands system. My goal is to create a seamless transition between my own voice and sensor-controlled synthetic sound artifacts.

As a live instrument, the sensor-based voice continues to open new fields of musical exploration for me.

Ulla Rauter

Ulla Rauter works as a media artist and musician at the interface of sound art and visual art - her works include performative sculptures, musical performances and self-built instruments. Her sensor-based, electronic musical instruments are shown internationally in performances and exhibitions. Central themes in her artistic work are silence as a material and place of longing and the human voice as the source material for translation and transformation processes. Ulla Rauter is co-founder and organizer of the annual Klangmanifeste audio show. She has been teaching at the Department of Digital Art at the University of Applied Arts Vienna since 2013.

Article translations are machine translated and proofread.
