Shouldn't your next musical or artistic project have something to do with artificial intelligence? In 2021, the impression is that this could be beneficial, and critical voices are even talking about hype. One thought in advance: the more frequently artistic projects integrate AI, the more sensible it seems to me to differentiate between them. Are we dealing with the application of AI as a (soon to be) general tool? Or are we rather experiencing an appropriation of AI, that is, an independent artistic position with and on AI that examines it critically in terms of form, aesthetics, or substance? More on this in part 3 of this short series of texts on AI & music/sound art.

The focus of the first part is different. When projects using neural networks and machine learning are promoted and marketed in music and art because of their innovative potential, it often goes unmentioned that artificial intelligence did not first appear in the 2000s. Moreover, early forms of AI are not even known in some contexts. They begin with the emergence of cybernetics in 1943, when Norbert Wiener joined forces with John von Neumann, engineers, and neuroscientists to study the similarities between the brain and computers. Wiener's book "Cybernetics: Or Control and Communication in the Animal and the Machine" was published in 1948 (Wiener, 1948; see note 1). It contains the first public use of the term cybernetics. According to Wiener, cybernetics is the science of the control and regulation of machines and of their analogies to the behavior of living organisms (due to feedback through sensory organs) and social organizations (due to feedback through communication and observation). This is also important for artificial intelligence. In 1950, Alan Turing formulated what later became known as the Turing test, a way to determine whether a computer has the same thinking ability as a human being. He originally called this test the imitation game. In the context of early AI, many rule-based systems could also be mentioned, e.g. Markov chains (Markov, 1906), in which the probabilities of future events are determined by a stochastic process, cellular automata (von Neumann, 1951), the Lindenmayer system (Lindenmayer, 1968), etc.

Figure 1: Markov chain with three states and incomplete connections
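To make the principle behind Figure 1 more tangible, here is a minimal Python sketch of a Markov chain with three states. The states (pitch names) and all probabilities are invented for illustration and are not taken from the figure. "Incomplete connections" is read here as: some transitions simply do not exist.

```python
import random

# Hypothetical transition table with three states. Missing entries are
# transitions with probability 0 (the "incomplete connections").
TRANSITIONS = {
    "C": {"E": 0.7, "G": 0.3},            # C never repeats itself
    "E": {"C": 0.4, "E": 0.1, "G": 0.5},
    "G": {"C": 1.0},                      # G always returns to C
}

def generate(start, length, rng):
    """Walk the chain: the next state depends only on the current one."""
    sequence = [start]
    while len(sequence) < length:
        options = TRANSITIONS[sequence[-1]]
        next_state = rng.choices(list(options), weights=list(options.values()))[0]
        sequence.append(next_state)
    return sequence

melody = generate("C", 16, random.Random(0))
print(" ".join(melody))
```

Each run with a different seed yields a different sequence, but every sequence obeys the same table: a G, for instance, is always followed by a C.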

Figure 2: Elementary Cellular Automaton with the first 20 generations of rule 30, starting with a single black cell, Wolfram MathWorld
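The pattern described in Figure 2 can be reproduced in a few lines. The following Python sketch assumes the usual convention for elementary cellular automata: the rule number, read as eight bits, gives the new cell value for each of the eight possible left/center/right neighborhoods. The grid width and the text rendering with "#" and "." are my own choices.

```python
RULE = 30
GENERATIONS = 20
WIDTH = 2 * GENERATIONS + 1  # wide enough that the pattern never reaches the edge

def step(cells, rule=RULE):
    """Compute the next generation; cells beyond the border count as white (0)."""
    padded = [0] + cells + [0]
    next_cells = []
    for i in range(1, len(padded) - 1):
        # Read left, center, right as a 3-bit number between 0 and 7.
        neighborhood = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        # The matching bit of the rule number is the new cell value.
        next_cells.append((rule >> neighborhood) & 1)
    return next_cells

def run(generations=GENERATIONS, width=WIDTH):
    row = [0] * width
    row[width // 2] = 1  # a single black cell in the middle
    rows = [row]
    for _ in range(generations - 1):
        row = step(row)
        rows.append(row)
    return rows

rows = run()
for row in rows:
    print("".join("#" if cell else "." for cell in row))
```

Changing `RULE` to any other value between 0 and 255 selects a different elementary automaton without touching the rest of the code.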

Figure 3: "Weeds", generated with a Lindenmayer system (L-system) in 3D

Any historical classification of the diverse strands of early AI can only be an attempt at understanding. One narrative holds that the formal, logic-based approach that became public in the 1950s (represented by the founding fathers of cybernetics in the USA) contrasted with an alternative approach originating in England, which tested the transfer of biological concepts to experimental setups of all kinds. While the former, techno-mathematical approach promoted talk of the "electronic brain", the development of the digital computer and the von Neumann architecture, and many paradigms of computer science, the latter, with prominent representatives such as Ross Ashby, Stafford Beer, and Gordon Pask, focused on observing the relationship between system and environment (Donner, 2010). Katherine Hayles, among others, offers an alternative categorization of cybernetics into three phases (Hayles, 1999).

But what does all this have to do with music and sound/art?

Let's take a look at the period from the 1950s onwards, with a focus on the early 1980s, which one could argue was the second wave of AI.

Once again, it is the USA where interesting developments emerge, and many figures from the world of music are involved. Admittedly, the group is heterogeneous in terms of content, but otherwise remarkably homogeneous once again: virtually all the protagonists are or were male, and almost all of them are white. (More on a critical reflection of this homogeneity in Part 2 and Part 3 of this short series of texts.) What were their thematic approaches, and what did they achieve, all those whom many have probably never heard of today?

They include researchers, composers, and musicians such as Marvin Minsky, David Cope, John Chowning, George E. Lewis, Curtis Roads, John Bischoff, and many more.

George E. Lewis describes the American cognitive and computer scientist Marvin Lee Minsky (1927 - 2016) as having a formative influence on the developing scene of that time. Together with John McCarthy (the inventor of Lisp), Nathaniel Rochester, and Claude Shannon, he established artificial intelligence as a concept at the Dartmouth Conference in 1956. Later, he and Seymour Papert were also founders of the Artificial Intelligence Lab (AI Lab) at MIT (Massachusetts Institute of Technology in Cambridge). Together with Edward Fredkin, Minsky created the Triadex Muse synthesizer in 1972 (cf. cdm, 2014). Much earlier, in 1951, he and Dean Edmonds developed a neural network computer called SNARC (Stochastic Neural Analog Reinforcement Calculator), which simulated the behavior of a mouse in a maze. Minsky later wrote:

What magical trick makes us intelligent? The trick is that there is no trick. The power of intelligence stems from our vast diversity, not from any single, perfect principle. (Minsky, 1987)

In 1964, John Chowning (born 1934), with the help of Max Mathews from Bell Telephone Laboratories and David Poole from Stanford University, created a computer music program that used the computer system of the Stanford Artificial Intelligence Laboratory. In the same year, he began research into the simulation of moving synthetic sound sources in an illusionary acoustic space (cf. Chowning 2010). In 1967, Chowning discovered the algorithm of frequency modulation synthesis, FM (cf. Chowning, 2021).

This short article cannot do justice to all the protagonists, but I would like to briefly mention David Cope (born 1941), a former Professor of Music at the University of California, Santa Cruz. Cope is also interested in the relationship between artificial intelligence and music. His EMI software (Experiments in Musical Intelligence, see Cope, 1996) has produced works in the style of Bach as well as Bartók, Brahms, Chopin, Gershwin, Joplin, Mozart, Prokofiev and even David Cope himself, ranging from short pieces to full-length operas, some of which have been commercially recorded (Cockrell 2001).

The developments at Mills College in Oakland were also remarkable at the time. It was here that Jim Horton, John Bischoff, and Rich Gold founded the League of Automatic Music Composers in 1977, playing concerts together with computers that were networked with each other. These early forms of AI should be viewed against the backdrop of the software and hardware available at the time; it was the era of the Commodore, one of the first personal computers on the market:

For the first time, the networking of several controlled computers made it possible not to limit the interactions between the players to signals, but to intervene directly in the game of the individual users by exchanging data between three computers or even to derive a musical product from all the collected actions (Medienkunstnetz, 2021)

In 1983, the League of Automatic Music Composers disbanded. Its successor project The Hub, in which John Bischoff collaborated with Tim Perkis, Scott Gresham-Lancaster, Chris Brown, Phil Stone, and Mark Trayle, was likewise dedicated to the potential of the computer as a live musical instrument. The Hub's classic piece for stand-alone machines was called Waxlips (Boundary Layer, 2008). Scott Gresham-Lancaster describes the collaboration as follows:

We were all completely autonomous, we all had different instruments, one had an Atari, another one a Commodore Amiga, […] or Oberheim xpander with pitch to midi converter. We had a set of rules for the transition networks, if you get a c you have to change the pitch, so you give out a b flat to someone else. Mark Trayle was a professional coder but we others were just composers, not really programmers. The systems crashed during the concerts. (Gresham-Lancaster, 2020)

It was a completely new way of playing music. The connection between input and output, the derivation of new information from incoming information became the focus. They used typewriter keyboards, for example, to enter commands without knowing exactly when a sound generator would convert them. As Scott Gresham-Lancaster puts it:

[everything was] happening in the timescale of the moment, happening in the NOW. (Gresham-Lancaster, 2020)

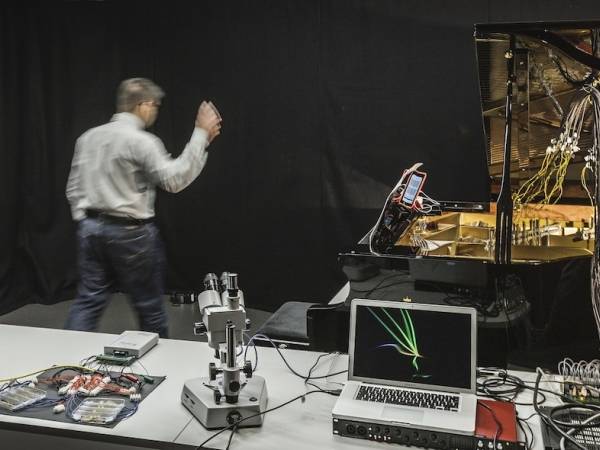

According to Lewis, even his best-known composition, Voyager, could not look ahead as much as five minutes; it is based on the concept of an immediate dialog with the environment. Lewis (b. 1952), a trombonist from the avant-garde jazz scene and Edwin H. Case Professor of American Music at Columbia University in New York, composed a new piece entitled Rainbow Family in 1984 during a residency at IRCAM in Paris. Like its famous successor Voyager, Rainbow Family was conceived as an interactive work for one or more human instrumentalists and an improvising orchestra. In this composition, the orchestral textures came from a trio of Yamaha DX-7 synthesizers controlled by Apple II computers running Lewis' software. At the heart of the software was a group of algorithms that generated music in real time and simultaneously produced sonic responses to the playing of four improvising soloists: Derek Bailey, Douglas Ewart, Steve Lacy, and Joëlle Léandre (see Lewis, 2007). The dialog between machine and human emerged like a game; one could perhaps also say that it involved cybernetically based ideas of feedback, first with a few, later with 64 channels of dialog.

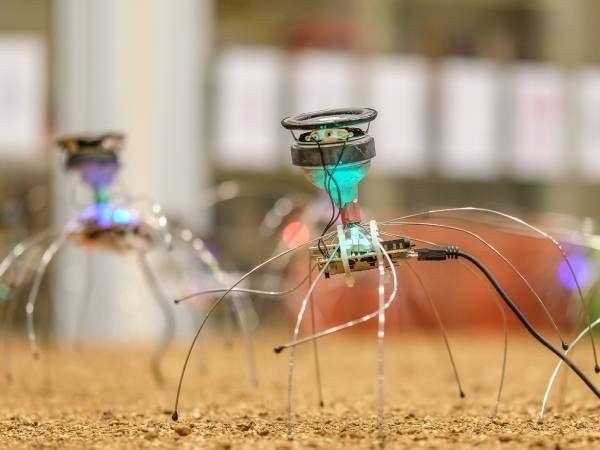

As a third musical example of early forms of musical AI, I would like to mention the concept of David Tudor's Neural Network Synthesizer. It emerged from a collaboration between Tudor and Forrest Warthman (with the help of others); they had met through a performance by Tudor with the Merce Cunningham Dance Company in Berkeley in 1989. Technologically, the neural network chip forms the heart of the synthesizer. It consists of 64 non-linear amplifiers with 10240 programmable connections. Each input signal can be connected to each neuron, whose output can be fed back to each input via on-chip or off-chip paths - each with variable connection strength (cf. Warthman, 1994). The neural network synthesizer with which Tudor played live was based on a sophisticated musical feedback system.
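The feedback principle described here can be imitated in software, at least very roughly. The following Python sketch is not a model of the actual analog chip: it assumes discrete time steps, `tanh` as the non-linear amplifier, and random connection strengths, and it only represents the 64 x 64 feedback connections between neurons, not the full set of 10240 connections. All names are hypothetical.

```python
import math
import random

NEURONS = 64  # number of non-linear amplifiers mentioned in the text

def make_weights(n, scale, rng):
    """Random connection strengths; row i holds the feedback into neuron i."""
    return [[rng.uniform(-scale, scale) for _ in range(n)] for _ in range(n)]

def step(state, weights, external):
    """One feedback pass: each neuron sums all weighted outputs plus its input."""
    return [
        math.tanh(sum(w * s for w, s in zip(row, state)) + x)
        for row, x in zip(weights, external)
    ]

rng = random.Random(1)
weights = make_weights(NEURONS, 0.5, rng)
state = [0.0] * NEURONS
impulse = [1.0] + [0.0] * (NEURONS - 1)  # a single "kick" on the first input
silence = [0.0] * NEURONS

trace = []  # output of neuron 0 over time, a crude stand-in for an audio signal
for t in range(200):
    state = step(state, weights, impulse if t == 0 else silence)
    trace.append(state[0])

print([round(value, 3) for value in trace[:8]])
```

After the first step the network receives no further input; whatever continues to happen in `trace` is produced by the feedback paths alone. Depending on the connection strengths, such a network may die away, settle, or keep fluctuating, which hints at why a performer would treat it as an instrument to be explored rather than programmed.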

But are all these examples really artificial intelligence? Or how can AI even be defined in the field of music? George E. Lewis argues:

Defining artificial intelligence is as problematic as it is to define any other kind of intelligence. I was interested in a kind of machine subjectivity that is to say I was trying to build something that could communicate with the other human performers. […] People would say “well, it doesn't understand me” when I do this. It doesn't get it and so you had to figure out, how to get it understands of what it was hearing, what kind of feature extraction and then what it would play in response. The idea that it kept playing the same thing as a response, that'll be very boring and so you want to have a number of potentially possible outcomes and you also want to have something, that can play differently with different people. Because, if people play differently, your feature extraction algorithm should reflect that. You can [even] leave the room, it keeps playing and it starts doing stuff. And then you come back and for an artist at some point one of the most important things you can do for me as a system like this is to have it to ignore me from time to time. […] and if that's AI I am ok to go with that. I mean its not the Lisp and Prolog kind of AI…

(Lewis, 2020; on Lisp and Prolog see note 2, on AI see the author's note 3)

Scott Gresham-Lancaster argues:

Communication is central. The network ATN [Augmented Transition Network], that is a part of linguistics, that really influenced the early work. (Gresham-Lancaster, 2020)

Interestingly, the understanding of AI has changed significantly over the last 40 years. Many early concepts and approaches were not understood as AI by the protagonists of their time, but today they are often regarded as such (see Brümmer 2020). About the past, Palle Dahlstedt says:

Maybe it wasn't AI. But I mean we can see, that it has that role, having some kind of agency or an agent outside of you, that you interact with. (Dahlstedt, 2020)

To conclude, George E. Lewis once more:

Defining AI is defining thinking. Gilbert Ryle, in 1976 professor of philosophy at Oxford, he wrote about improvisation but he never mentioned music at all, which I think is an incredibly smart move: The idea behind that is quite interesting: what it means to think, it means to improvise. So that means, that artificial intelligence could be a form of artificial improvisation. (Lewis, 2020)

With thanks to: Marcus Schmickler

- 1

The basic texts of cybernetics are in turn the results of anti-aircraft defense research and automated target control during the Second World War; topics to which Wiener devoted himself together with Claude Shannon.

- 2

https://www.pearson.com/en-gb/subject-catalog/p/prolog-programming-for-artificial-intelligence/P200000003804?view=educator (accessed 15.03.2021)

- 3

AI stands for artificial intelligence.

Anke Eckardt

Anke Eckardt is an artist and professor of fine arts at the University of Art and Design Graz. She was born in Dresden in 1976 and has lived in Graz and Vienna since 2023, having worked in Berlin, Los Angeles, Cologne, Kassel and Rome. In her expansive work, she works with sound in intermedia connections, creating audiovisual installations and sculptures that she exhibits internationally. Anke Eckardt also contributes to the further development of discourses through an artistically explorative perspective.

Article translations are machine translated and proofread.
